The Ultimate Guide to Building AI Agents

Start Building Smarter AI Agents With the Right Use Case

Don’t waste time building agents that won’t scale. This guide reveals where AI agents drive tangible ROI (and where they don’t) with real-life use cases.

Download the guide for a full walkthrough:

- Which AI agent use case will bring my team the most value?
- How do I get agents beyond trials?
- What are some examples of successful AI agent use cases?

Trusted by 1 in 4 of the World's Top Companies.*

*Top 500 companies of the 2024 Forbes Global 2000, excluding China


AI Agent Use Cases That Drive Real ROI

The 5-Step Framework to High-ROI AI Agents

Follow this proven framework to pick, build, and ship your first agents fast.

Not Sure Where to Begin With AI Agents?

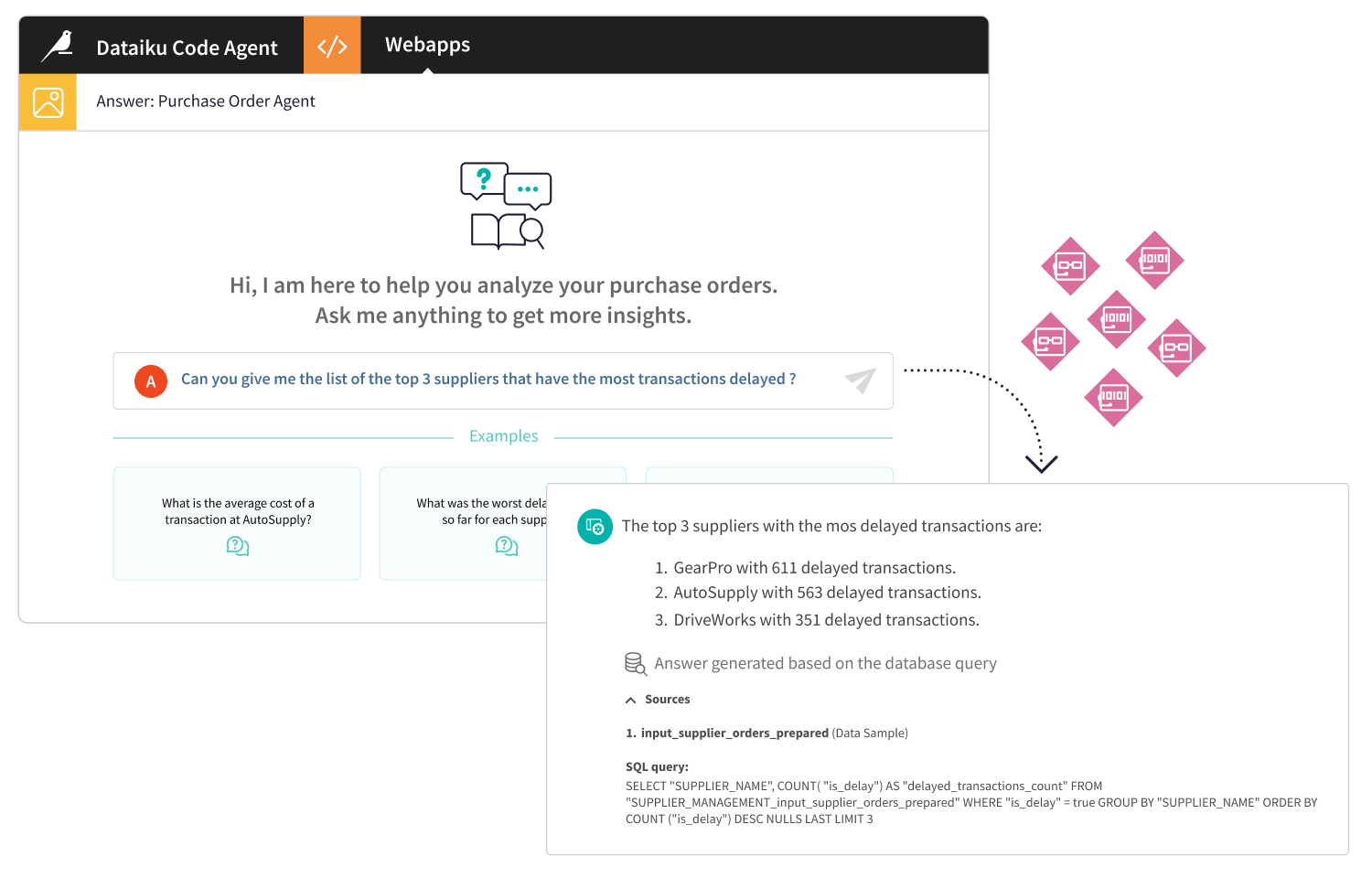

Explore eight real-world AI agent use cases that boost expertise, streamline knowledge-heavy work, and run securely, all built in Dataiku.

Prioritize What Pays Off

Score every agent idea by ROI potential, implementation complexity, and user readiness so you know exactly what to build first and can scale high-impact agents quickly.
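To make the scoring idea concrete, here is a minimal sketch of how a team might rank candidate agents on those three criteria. Everything in it is an assumption for illustration: the 1-to-5 scales, the weights, the `AgentIdea` and `priority_score` names, and the placeholder ideas and their scores are hypothetical and are not taken from the guide or from Dataiku.

```python
from dataclasses import dataclass

# Hypothetical weights (assumption): the guide does not publish any.
# Adjust them to reflect your own priorities; they sum to 1.0 here.
WEIGHTS = {"roi": 0.5, "ease": 0.3, "readiness": 0.2}


@dataclass
class AgentIdea:
    name: str
    roi: int         # ROI potential, 1 (low) to 5 (high)
    complexity: int  # implementation complexity, 1 (easy) to 5 (hard)
    readiness: int   # user readiness, 1 (low) to 5 (high)


def priority_score(idea: AgentIdea) -> float:
    """Weighted score where higher means 'build sooner'."""
    # Complexity counts against an idea, so invert it: 5 (hard) -> 1, 1 (easy) -> 5.
    ease = 6 - idea.complexity
    return (
        WEIGHTS["roi"] * idea.roi
        + WEIGHTS["ease"] * ease
        + WEIGHTS["readiness"] * idea.readiness
    )


def rank(ideas: list[AgentIdea]) -> list[AgentIdea]:
    """Return ideas sorted from highest to lowest priority."""
    return sorted(ideas, key=priority_score, reverse=True)


# Placeholder ideas with made-up scores, purely to show the mechanics.
ideas = [
    AgentIdea("Idea A", roi=5, complexity=4, readiness=3),
    AgentIdea("Idea B", roi=4, complexity=2, readiness=4),
]
ranked = rank(ideas)

for idea in ranked:
    print(f"{idea.name}: {priority_score(idea):.2f}")
```

With these illustrative numbers, the lower-ROI but easier and better-adopted idea ranks first, which is the kind of trade-off a scoring pass is meant to surface before you commit to a build.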

Example Inside: Predictive Maintenance Agents

Example Inside: Clinical Trial Intelligence Agents

Dataiku: Where AI Agents Come to Life at Scale

Dataiku makes it simple for teams to build, deploy, and manage AI agents in one unified, governed platform. From visual and code-based creation tools to built-in governance and the Dataiku LLM Mesh for cost, quality, and security control, Dataiku makes building robust agents seamless.
